Learning Human–Environment Interactions using Conformal Tactile Textiles

Publication

Nature Electronics

Authors

Yiyue Luo, Yunzhu Li, Pratyusha Sharma, Wan Shou, Kui Wu, Michael Foshey, Beichen Li, Tomás Palacios, Antonio Torralba, Wojciech Matusik

Abstract

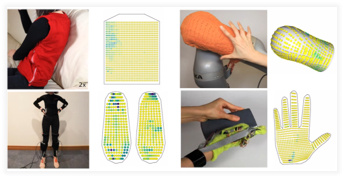

Recording, modelling, and understanding tactile interactions is important in the study of human behaviour and in the development of applications in healthcare and robotics. However, such studies remain challenging because existing wearable sensory interfaces are limited in terms of performance, flexibility, scalability, and cost. Here we report a textile-based tactile learning platform that enables recording, monitoring, and learning of human–environment interactions. The tactile textiles are created via digital machine knitting of inexpensive piezoresistive fibres, and can conform to arbitrary three-dimensional geometries. To ensure that our system is robust against variations in individual sensors, we use machine learning techniques for sensing correction and calibration. Using the platform, we capture diverse human–environment interactions (more than a million tactile frames), and show that the artificial intelligence-powered sensing textiles can classify humans’ sitting poses, motions, and other interactions with the environment. We also show that the platform can recover dynamic whole-body poses, reveal environmental spatial information, and discover biomechanical signatures.